Fraud is a common use-case for machine learning algorithms. It is also a very hard problem to tackle. Not only is it a cat and mouse game; it is also a low-signal vs. noise environment.

Despite this it is not uncommon to hear about success stories, especially at tech conferences. What follows is an imaginary story based on talks I’ve had with folks at said tech conferences. It will illustrate something crazy that is bound to happen somewhere, someday.

Succes

Let’s imagine you work at a financial firm.

Your team just spent a month running gridserarch. Squeezing every last drop of accuracy out of your cross-validations. But it was all well spent; your model went to production.

And not just any model. One that is very accurate at detecting cases of fraud at the financial institution that you work for. This is not only confirmed by your cross validated experiments on the offline dataset but also in the field. It has proven itself to such an extend that the fraud teams are spending most of their time prosecuting. Gone are the days of searching/investigating for fraudsters.

Raises are given to your team. Promotions are handed out too. White-papers get written. And to put the cherry on top; you get the opportunity to brag about it at tech conferences.

Cost

One might wonder though. Presumably there’s plenty of people at this financial firm who have been fighting fraud for many years (even decades). Is it really that plausible that merely tuning an algorithm on a dataset is enough to solve issue of fraud at this financial? It might sound a bit too good to be true. So let us instead consider a more plausible explanation of what just happened.

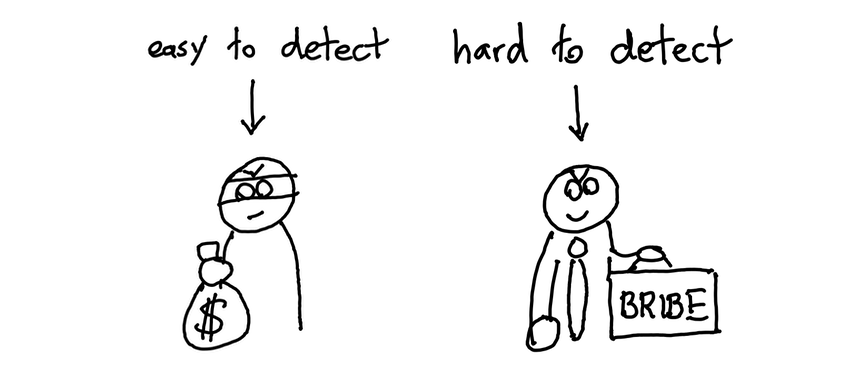

The typical reason why fraud use-cases are hard, even for machine learning algorithms, is not just because of the class imbalance. It is because you cannot trust the labels in the first place. The labels for ‘fraud’ are probably trustworthy, but the label indicating ‘no-fraud’ might very well be undetected fraud. It has probably never been checked by a human simply because there are so many cases to consider. It is also plausible that the fraud cases that did receive a label are the ones that were easy to detect. Your training data is bound to have more easy examples than hard ones.

So what happens when you present a fraud team with an ML algorithm that is very accurate? They will receive an algorithm that has a blind spot for very hard cases of fraud in favour of the easy ones.

Unfortunately this bias will propogate indefinately because when this algorithm is applied it will send teams to easy cases making sure that any new training data will also focus on it. The fact that your algorithm is highly accurate will only reinforce it’s application which in turn will keep giving the appearance like you are fixing fraud.

The naughty, but plausible, situation is that it is now harder to find the harder types of fraud because your model has been reported to be highly accurate.

Irony

It is because the model was reported to be highly accurate that people were less critical in the application of it. It’s not just in this theoretical fraud example, blind faith in machines has already caused deaths. The best example of this is the dozens of deaths caused by GPS in death valley. Instead of following the warning signs people blindly trusted their GPS devices to be more accurate.

It really is ironic. A 100% accurate model might cause blind faith, which will do horrible things for the application of the model while at the same time 100% accuracy is the goal we all try to achieve when we train our systems.

It not just fraud either. Can you imagine what it is like if doctors put blind trust in algorithms that detect diseases?

So maybe, when we think about the application of machine learning models, we should think about the feedback loop first. And maybe, just maybe, we need to serve highly accurate models with some entropy. Not so much entropy that people who rely on it won’t trust it but enough such that the people who rely on it don’t trust it blindly.

Blind faith is the expensive cost to success.

Appendix

Part of the inspiration of this piece came from an episode of cautionary tales. It’s a podcast that I can recommend. Many of the warnings in that podcast can be directly applied to the field of machine learning.