I read a paper the other day that starts by asking a great question.

Are We Modeling the Task or the Annotator?

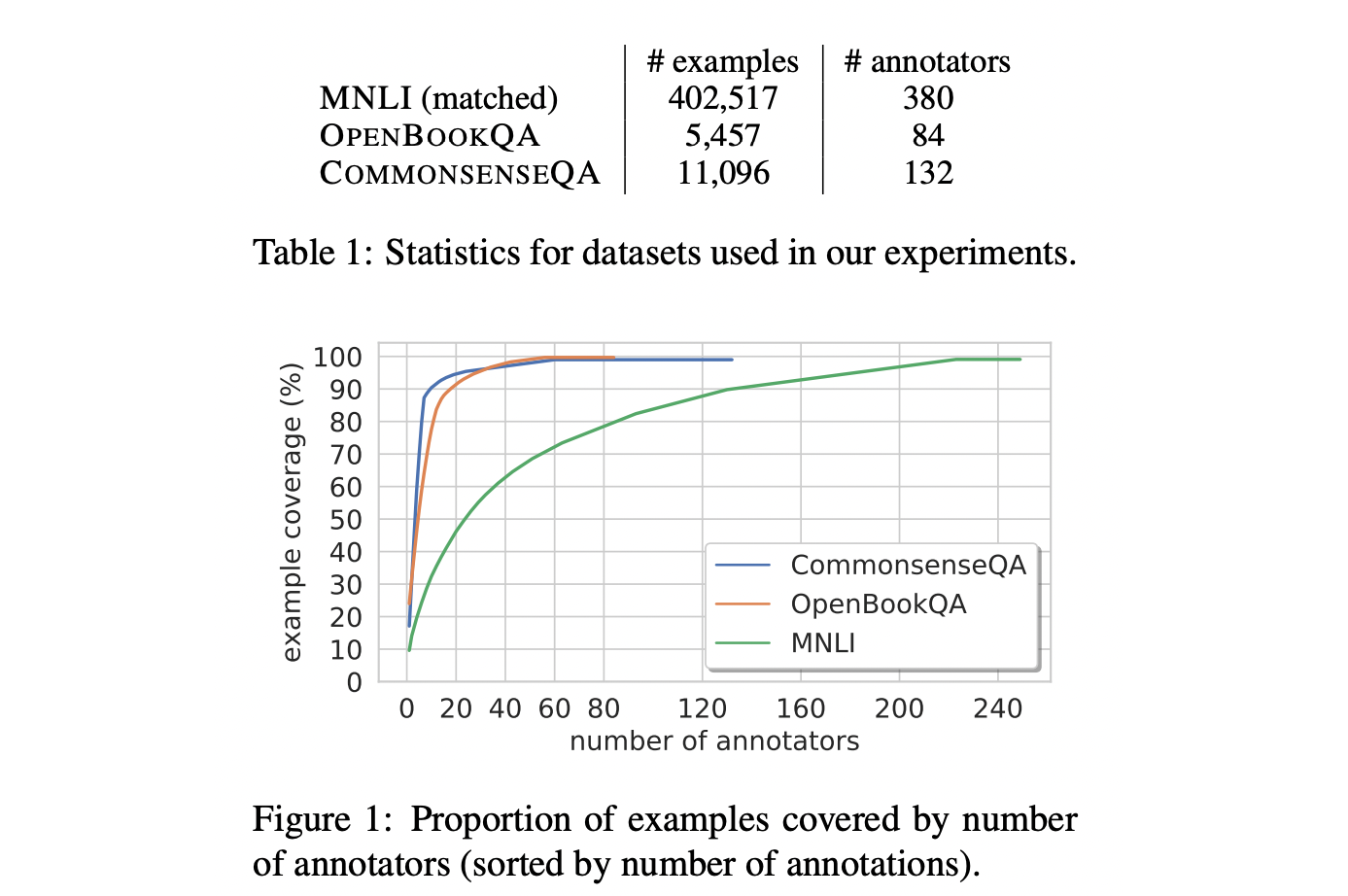

The paper makes a great point just by asking the question, but the authors run a couple of good experiments too. Annotation tasks seem to follow an 80/20 rule: roughly 20% of the annotators might be annotating 80% of the data.

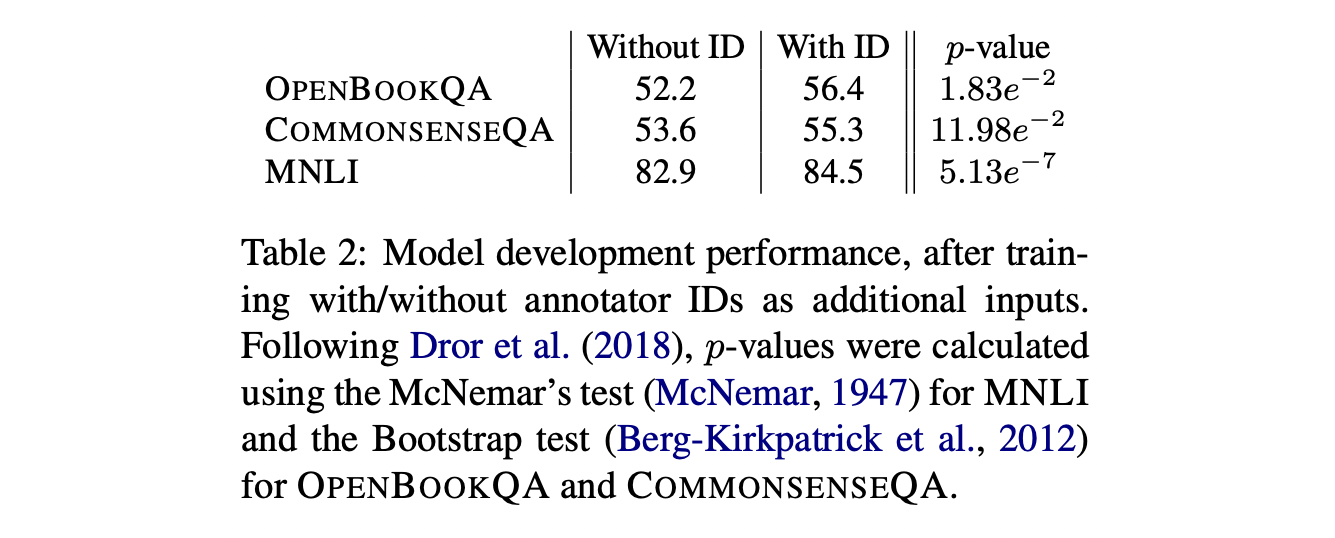
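If you want to check how skewed your own annotation effort is, a quick count is enough. Here is a minimal sketch, assuming you have one annotator ID per annotated example. The `top_share` helper and the toy `ids` list are made up for illustration and do not come from the paper.

```python
from collections import Counter


def top_share(annotator_ids, top_fraction=0.2):
    """Share of all annotations produced by the most active annotators."""
    counts = Counter(annotator_ids)
    # Number of annotators in the "top" group, at least one.
    n_top = max(1, int(len(counts) * top_fraction))
    top_total = sum(count for _, count in counts.most_common(n_top))
    return top_total / len(annotator_ids)


# Hypothetical log: five annotators, one of them doing most of the work.
ids = ["a"] * 80 + ["b"] * 8 + ["c"] * 6 + ["d"] * 4 + ["e"] * 2
share = top_share(ids)
print(f"top 20% of annotators produced {share:.0%} of the annotations")
```

A value close to 1.0 means a handful of people define most of the dataset.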

In one of the experiments they feed the annotator ID to the model as an extra feature alongside the task input. They show that model performance improves when training with annotator identifiers as features, and that models are able to recognize the most productive annotators.

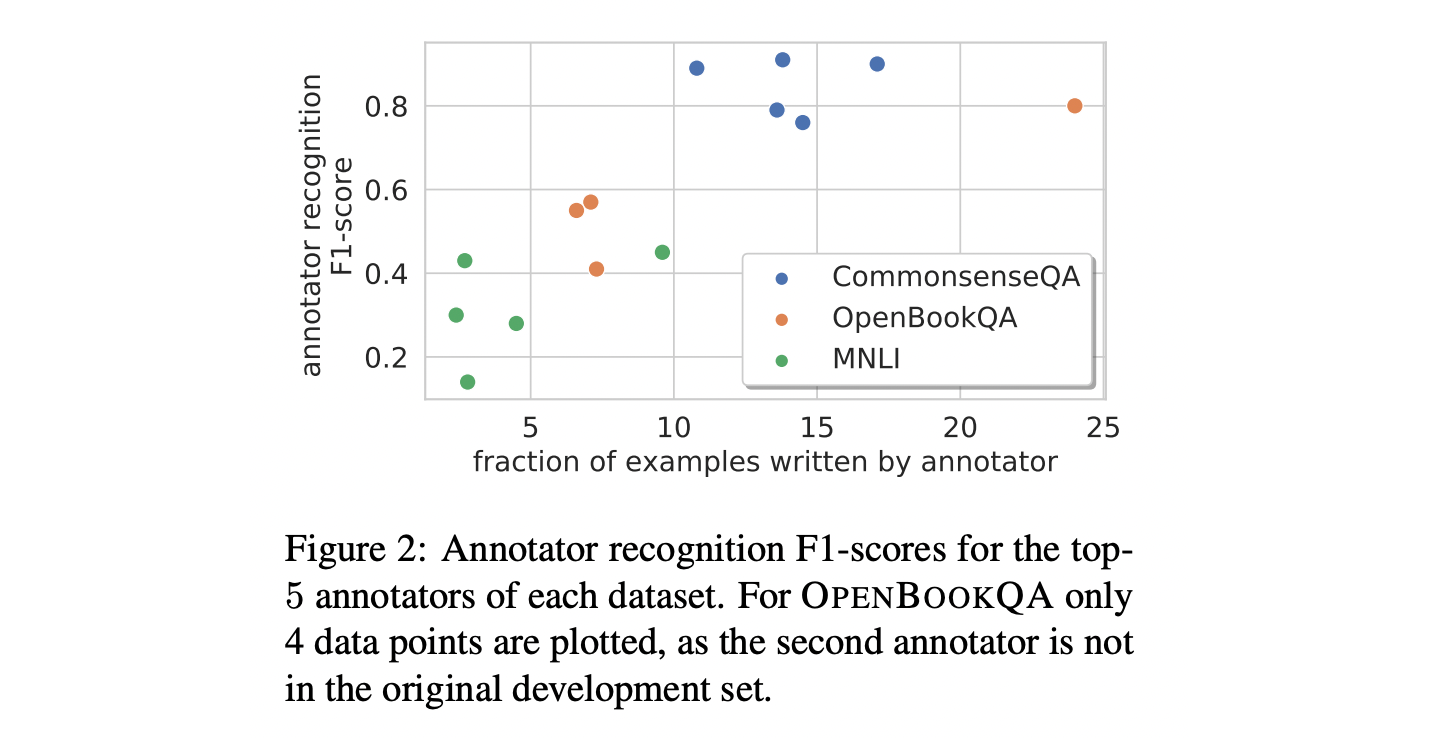
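To make the idea of "annotator ID as a feature" concrete, here is a rough sketch with scikit-learn. This is not the paper's setup: the toy rows, the rater names and the bag-of-words plus logistic regression model are all my own assumptions. The two raters agree on the clear texts and systematically disagree on the ambiguous one, so only the model that sees the rater ID can get those rows right.

```python
from scipy.sparse import hstack
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import OneHotEncoder

# Toy rows: (text, rater_id, label). Entirely made up for illustration.
patterns = [
    ("so great", "r1", 1), ("so great", "r2", 1),
    ("so boring", "r1", 0), ("so boring", "r2", 0),
    ("oh wow", "r1", 1), ("oh wow", "r2", 0),
]
# The held-out rows reuse the same patterns, so this only shows the mechanics.
train, test = patterns * 20, patterns * 5


def split(rows):
    texts = [text for text, _, _ in rows]
    raters = [[rater] for _, rater, _ in rows]  # 2D, as OneHotEncoder expects
    labels = [label for _, _, label in rows]
    return texts, raters, labels


def accuracy(use_rater):
    texts_tr, raters_tr, y_tr = split(train)
    texts_te, raters_te, y_te = split(test)
    vec = CountVectorizer()
    X_tr, X_te = vec.fit_transform(texts_tr), vec.transform(texts_te)
    if use_rater:
        # Append one-hot rater columns to the bag-of-words features.
        enc = OneHotEncoder(handle_unknown="ignore")
        X_tr = hstack([X_tr, enc.fit_transform(raters_tr)]).tocsr()
        X_te = hstack([X_te, enc.transform(raters_te)]).tocsr()
    model = LogisticRegression().fit(X_tr, y_tr)
    return model.score(X_te, y_te)


acc_text_only = accuracy(use_rater=False)
acc_with_rater = accuracy(use_rater=True)
print(acc_text_only, acc_with_rater)
```

The text-only model has to give both raters the same answer for "oh wow", so it cannot get more than half of those rows right. That gap is the kind of signal the annotator feature picks up.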

They then go a step further and try to predict the annotator ID itself. They limit themselves to the top-5 annotators and they get pretty high F1 scores on one of the datasets.

Being able to predict annotators is a pretty clear sign that something bad is happening because it suggests that the annotators disagree in a predictable manner.
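A check like this is cheap to run on your own data: treat the annotator ID as the label and see how well a simple text model recovers it. The sketch below uses scikit-learn on toy sentences that I made up, where each hypothetical annotator (`a1`, `a2`) has a telltale phrasing. It is not the model from the paper.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.pipeline import make_pipeline

# Toy (text, annotator_id) pairs. Each annotator has a recognizable style.
train_rows = [
    ("the man is really happy", "a1"),
    ("a dog is really fast", "a1"),
    ("the sky is really blue", "a1"),
    ("the man seems kinda happy", "a2"),
    ("a dog seems kinda fast", "a2"),
    ("the sky seems kinda blue", "a2"),
]
test_rows = [
    ("the cat is really small", "a1"),
    ("the cat seems kinda small", "a2"),
]

train_texts, train_ids = zip(*train_rows)
test_texts, test_ids = zip(*test_rows)

# The target is the annotator, not the task label.
model = make_pipeline(CountVectorizer(), LogisticRegression())
model.fit(train_texts, train_ids)

macro_f1 = f1_score(test_ids, model.predict(test_texts), average="macro")
print(f"macro F1 for annotator prediction: {macro_f1:.2f}")
```

On real data, a score well above what a majority-class guess would give is the warning sign: the model can tell who produced an example from the text alone.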

The paper goes into more depth than I’ll go into here, but a part of the conclusion does deserve repeating.

> Moreover, we tested the ability of models to generalize to unseen annotators in three recent NLU datasets, and found that in two of these datasets annotator bias is evident. These findings may be explained by the annotator distributions and the size of these datasets. Skewed annotator distributions with only a few annotators creating the vast majority of examples are more prone to biases.

GoEmotions

The results of the paper triggered me, so I went ahead and tried running a similar experiment on Google's GoEmotions dataset. This dataset comes with annotator information, so I was able to try out some things.

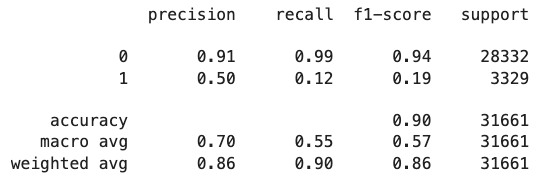

If I try to predict the “excitement” emotion without adding annotator information, I get this confusion matrix:

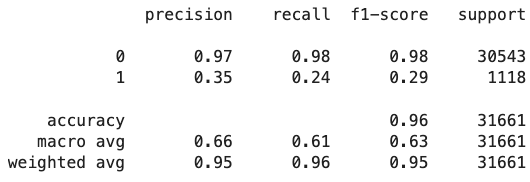

If I do add it, it gets more accurate!

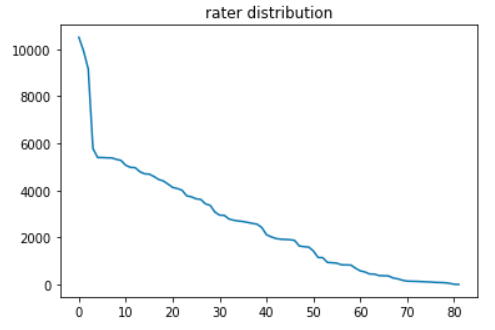

To make things more interesting, the number of annotations per rater tends to vary a bit.

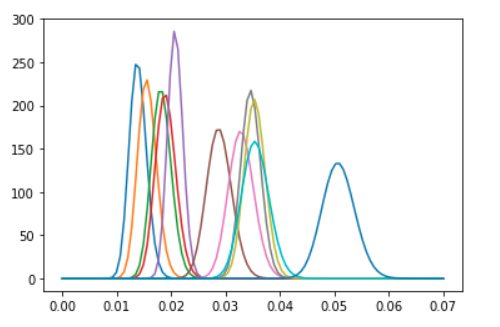

It’s not just the volume that differs. Some annotators also have a tendency to give more 1 labels than others.
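Both effects are easy to tabulate. Here is a minimal sketch, assuming the annotations are available as `(rater_id, label)` pairs with 0/1 labels. The helper name and the toy rows are hypothetical, not taken from the dataset.

```python
from collections import defaultdict


def positive_rate_per_rater(rows):
    """Map each rater ID to (number of annotations, fraction labelled 1)."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for rater_id, label in rows:
        totals[rater_id] += 1
        positives[rater_id] += label
    return {r: (totals[r], positives[r] / totals[r]) for r in totals}


# Toy rows: same volume per rater, very different tendency to say "1".
rows = [
    ("r1", 1), ("r1", 1), ("r1", 0), ("r1", 1),
    ("r2", 0), ("r2", 0), ("r2", 1), ("r2", 0),
]
stats = positive_rate_per_rater(rows)
for rater_id, (n, rate) in sorted(stats.items()):
    print(rater_id, n, rate)
```

Sorting this table by the positive rate makes the trigger-happy and the conservative raters stand out quickly.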

Data quality remains a huge problem!