A while ago I got access to the GitHub Copilot program. I’ve yet to be impressed by the tool, but I figured I’d share some of the results it generated.

I figured I should try using it to write some unit tests. So I wrote some tests for a function that removes the Rasa entity syntax from strings.

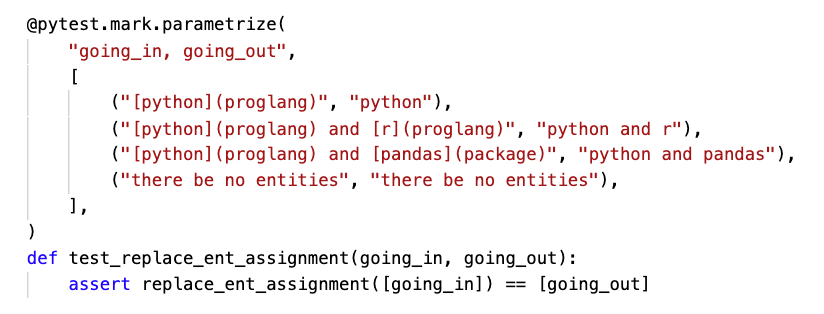

Figure 1: Before and after. Written by a human.

Note a few things.

- The function accepts a list of strings.

- The function outputs a list of strings.

- Inside of the test I’m wrapping the `going_in` and `going_out` variables in a list to accommodate this.
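The post only shows the function and its test as screenshots, so here is a minimal sketch of the setup under stated assumptions. The name `replace_ent_assignment` is inferred from the test name and is hypothetical, and the regex only handles Rasa’s Markdown-style `[text](entity)` annotation, not the `[text]{"entity": ...}` variant. It shows why `going_in` and `going_out` need to be wrapped in lists.

```python
import re
from typing import List

# Hypothetical stand-in: the real function only appears in a screenshot.
# Matches Markdown-style entity annotations such as "[Amsterdam](city)"
# and captures the annotated text inside the square brackets.
ENTITY_PATTERN = re.compile(r"\[([^\]]+)\]\([^)]+\)")


def replace_ent_assignment(texts: List[str]) -> List[str]:
    """Strip entity annotations from each string, keeping only the text."""
    return [ENTITY_PATTERN.sub(r"\1", text) for text in texts]


def test_replace_ent_assignment():
    going_in = "i live in [Amsterdam](city)"
    going_out = "i live in Amsterdam"
    # The function works on lists, so both sides are wrapped in a list.
    assert replace_ent_assignment([going_in]) == [going_out]
```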

New Test

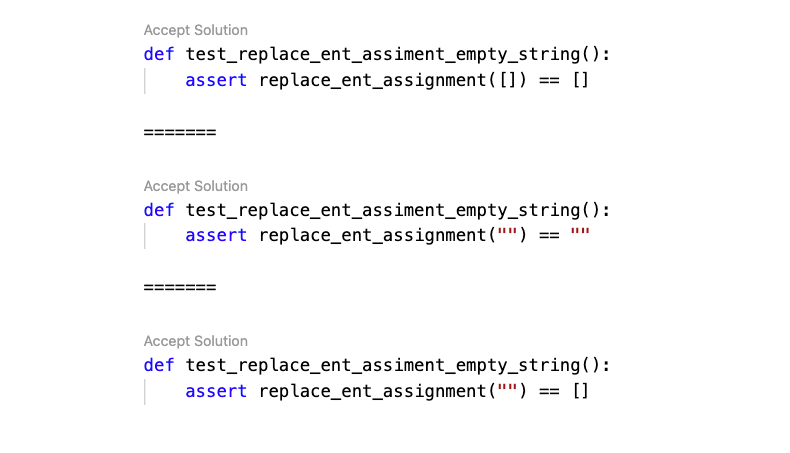

Next, I wrote def test_replace_ent_assignment_empty_string and asked co-pilot to complete the text. Here’s what it came up with.

Figure 2: All these examples are incorrect.

Technically, all of these tests are wrong. Copilot fails to understand that the input should be a list containing a single empty string, and that the output should likewise equal a list containing a single empty string.
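For contrast, this is roughly the test I was hoping for, again written against a hypothetical stand-in for the real function (same `[text](entity)`-only assumption as before). Both the input and the expected output are a list holding one empty string, not a bare `""` and not an empty list.

```python
import re
from typing import List


# Hypothetical stand-in for the function under test.
def replace_ent_assignment(texts: List[str]) -> List[str]:
    return [re.sub(r"\[([^\]]+)\]\([^)]+\)", r"\1", t) for t in texts]


def test_replace_ent_assignment_empty_string():
    # Input: a list holding one empty string.
    going_in = [""]
    # Output: the same shape, a list holding one empty string.
    going_out = [""]
    assert replace_ent_assignment(going_in) == going_out
```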

I can imagine how these samples got generated. But they are all wrong.

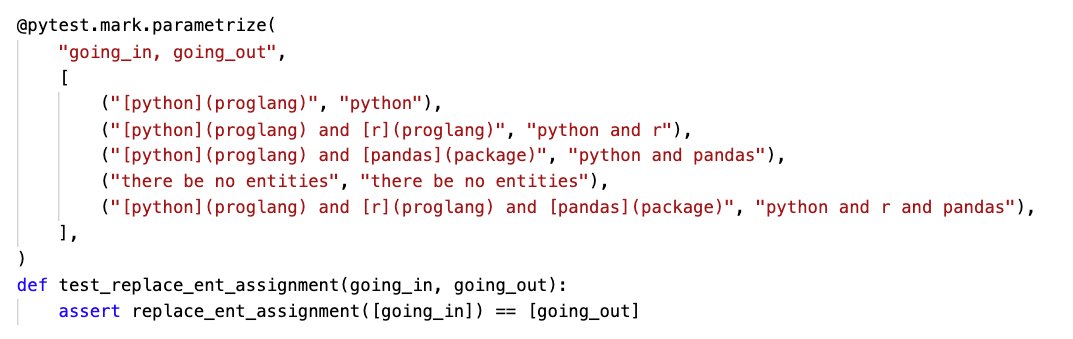

New Parameters

Instead of generating a new test, I wondered if we could get the algorithm to generate new examples for the original test. So I asked the algorithm to generate one example. It generated right after the "there be no entities" example.

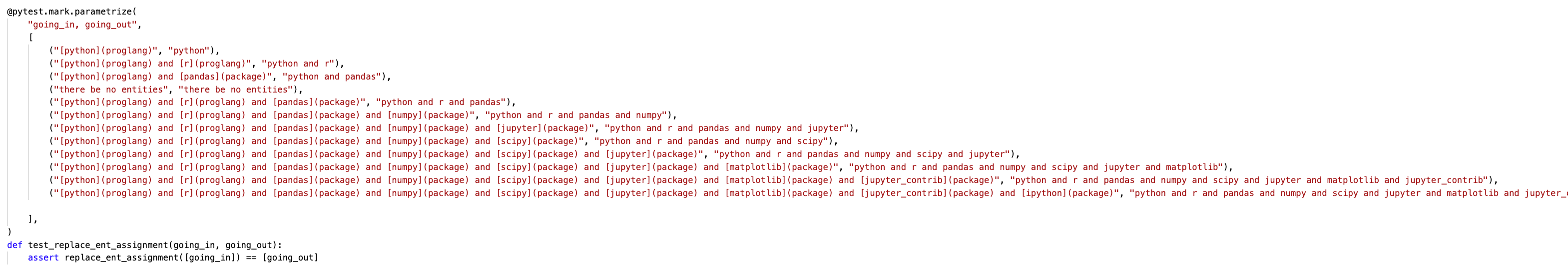

This was a fair example. Then this happened.

It seems to have generated appropriate candidates inside of `parametrize`, but part of me isn’t that impressed. It’s still very much “repeating parrot” behavior, not the behavior of a pair programmer.